JO ANN WARNER – Associate Director, Western Extension Risk Management Education Center

REBECCA SERO, Ph.D. – Evaluation Specialist, Washington State University Extension

Program evaluation is essential to the success of the Extension Risk Management Education (ERME) program. ERME’s online application and reporting system integrates results verification – the methods the project team will use to evaluate and measure producer results (outcomes) – into the project’s scope of work. In this article, we address the mechanics of results verification through the lens of program evaluation and show how it can be used both to document risk management impacts and to accumulate success stories from farmers and ranchers.

The worth of public value-level impacts is inextricably linked to your evaluation findings (Kalambokidis, 2013). Evaluation seeks to gain insights about your target audience: what works for them and what does not (Patton, 2008). It documents improvements and changes in practice that strengthen the risk portfolios of farm and ranch operations, and it assesses how well program participants are able to meet their risk management goals and objectives. Well-designed program evaluation builds risk-bearing capacity for farmer and rancher participants, as well as within your program and community of stakeholders, and it strengthens accountability to the funding organization and other partners.

Another primary purpose of evaluation is to assess a program’s strengths and weaknesses so that appropriate changes can be made to improve the quality of the programs offered, and to determine how well the program accomplished its stated objectives (Patton, 2008). These findings may shape how you plan for and develop future programs.

The evaluation process (and design) begins when you conceive of and plan a new program or project, and it involves several stages throughout the life of the project and after it has ended. The information you seek from participants will change at each stage of program delivery, as will the type of evaluation best suited to obtaining it. The types of evaluation critical to outcome/results-based programs such as ERME are needs assessment, outcome evaluation, and impact evaluation. Process evaluation is integral as well, because it measures how well the project team and collaborators are executing the program and how accountable they are for helping participants reach the desired outcomes.

Process Evaluation

Program team members conduct a process evaluation to understand what happens during the implementation of a program (Saunders, 2015). The results can then serve as a road map for how you want your project to be implemented and how you want your team and partners to function.

What you have laid out in your scope of work (Project Steps) and what you actually do can sometimes be two different things. The Progress Notes section of an ERME progress report makes it easy to record detailed data on the processes associated with program delivery. For example, you might ask: Was the program delivery consistent? Were the activities delivered as initially conceived? Were adequate tools and resources provided to help participants achieve changes in practice? Was it a good learning experience for participants? How did the participants view the exchange? You might also ask whether participants had adequate access to the team and partners, and whether your project team reached the anticipated number of producers.

Good process evaluation can provide a clear path for what you want to do next: it allows you to document your strengths and weaknesses as an organization and/or program, determine your level of effectiveness, and decide how to structure improvements (Saunders, 2015).

Needs Assessment

The ERME program places a high priority on an applicant organization’s ability to determine producer demand, that is, to understand and then articulate participants’ motivations for taking part in the proposed project or training. A needs assessment can be used to understand the characteristics and priorities of your target population (Witkin & Altschuld, 1994). This may be especially helpful if you are starting from scratch with no prior knowledge of your target audience, or if you are one or two steps removed from them. A needs assessment can also help secure credibility within the community and identify potential challenges or barriers to participants’ ability to achieve the desired outcomes/results. Its findings may lead to modifications in curriculum, delivery methods, or other approaches that make programming more appropriate for your audience (Witkin & Altschuld, 1994).

A needs assessment allows your target audience to define the relevance (or not) of the proposed risk management topic(s) and to provide valid evidence back to you that the issue needs to be addressed. Stakeholders aligned with your target audience (commodity and/or grower organizations), and others within its sphere of influence, may also help define relevant factors that support the need for training or education. An established relationship with your farm/ranch audience can make a needs assessment easier to conduct, and you may already have benchmarking data, such as participants’ knowledge levels and other factors, that support the need for future programs.

Outcome Evaluation

Outcome evaluation is used to determine the (risk management) changes in practice that your farm/ranch audience achieved because they participated in your program. It attempts to link the changes they made to a specific part of the program. The “How will you verify” column of the Results section of the ERME application makes it easy to establish these links.

Conducting an outcome evaluation helps the project team determine to what extent participants are making the proposed changes and whether the program delivery is actually helping farm/ranch participants achieve their goals. It also shows the degree to which participants are benefiting from the training or program activities. Outcome evaluation also enables the project team to identify what is working well for participants and what pitfalls may be getting in the way of their success (Hoggarth & Comfort, 2010). Finally, outcome evaluation documents unintended or unexpected results. Sometimes these unexpected successes have as much value to participants as the outcomes initially proposed by the project team, if not more (Morell, 2005).

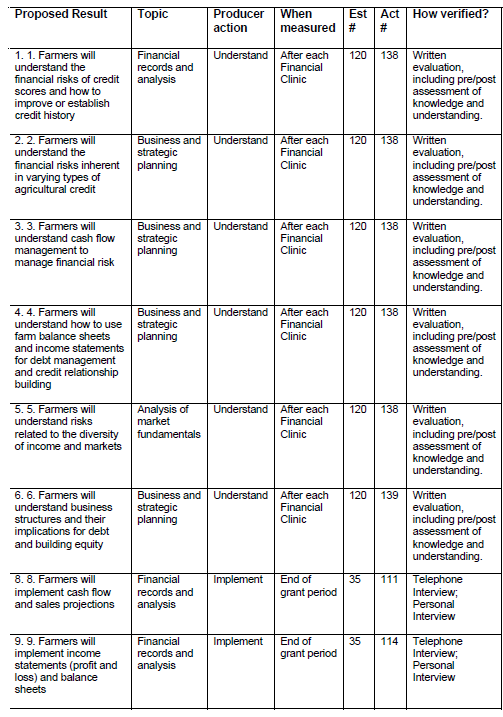

Outcome evaluation is critical to the success of an ERME project because it measures and verifies the short- and medium-term results participants achieve during and by the end of an 18-month project. Figure 1 shows a progression of proposed risk management outcomes/results and identifies the point in program delivery at which participants are expected to be able to make each change.

Impact Evaluation

To understand the impact, or effect, of a program, conduct an impact evaluation. This type of evaluation attempts to answer the cause-and-effect question of whether the program caused the observed changes (Gertler et al., 2016).

Because it attempts to establish causality, impact evaluation requires specific methodologies and therefore more time and effort from the project team. In return, it can examine longer-run changes in more depth. Impact is measured not only at the individual participant level, to understand whether longer-term changes in practice have been achieved, but also at the community level, where the program may have had positive effects (W.K. Kellogg Foundation, n.d.). For example, an impact evaluation can help a project team examine the economic, social, and environmental gains that have occurred at the community level.

An impact evaluation can also help show whether the project yielded a good return on investment for the resources used (Gertler et al., 2016). The degree of public value-level impacts, including expanded program capacity and benefits realized by stakeholders in the public and private sectors, can be one of the greatest indicators of success (Kalambokidis, 2013).

Impact Reporting

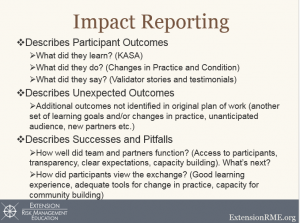

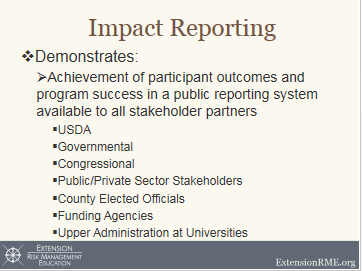

Using an impact report to capture and share evaluation findings, as a demonstration of participant outcomes and program successes, has great value for stakeholder partners, especially in a public reporting system (Figures 2 and 3). A good impact report articulates the results or findings from each of the four types of evaluation: needs assessment (demonstrated input and buy-in from the target audience); process (demonstrated accountability of the team and partners); outcome (evidence of knowledge, awareness, and skills gained by participants, changes in practice and condition, and stories of success); and impact (evidence of long-run outcomes with positive results for participants and the larger community).

Putting it All Together

In the ERME program, outcomes/results achieved by producer participants are an expectation of program delivery. Pairing realistic outcomes for program participants with a well-designed evaluation system can lead to relevant and long-lasting impacts. Efforts to develop appropriate outcomes for a program audience may fall short if the applicant organization lacks a clear understanding of the types of outcomes the funder is seeking. If you are unsure what constitutes a good risk management outcome, a signature resource is Introduction to Risk Management (Crane et al., 2013). This document clearly defines agricultural risk categories along with the choices, decision aids, and other changes in practice or strategy needed to keep farm and ranch operations viable. Because farms vary greatly in size and in the crops and livestock they produce, and because target audiences differ in knowledge level and culture, outcomes will need to be tailored to these variables. If the funding organization has established priorities, determine whether they fit within the scope of your proposed outcomes.

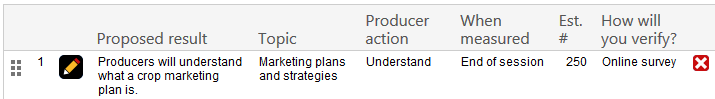

In the “How will you verify” column of the Results section in the ERME application/reporting system, the type of data collection (evaluation) instrument depends on what you want to know and what information you need to gather from participants to verify the achievement of each proposed outcome. The “When measured” column makes it easy to align each outcome with your program delivery schedule (Figure 4).

To be effective, data collection tools should be chosen or designed in the same timeframe in which you develop the proposed set of participant outcomes; otherwise, a mismatch between tools and outcomes may stand in the way of gathering accurate data. Use technology to the greatest degree possible; for example, Excel spreadsheets and online data collection tools such as SurveyMonkey will help organize your data collection process. Streamline the process from start to finish with a well-thought-out plan for measuring (1) short-term outcomes (knowledge gained, actions taken); (2) mid-term outcomes (what participants did differently through actions and practice), measured through follow-up evaluations that may occur 3 to 6 months after program delivery; and (3) long-term impacts (changes in the condition of the environment, community, economy, and so forth).
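The planning step above can be sketched in code. Below is a minimal, hypothetical Python example that records each outcome with its term and verification instrument (mirroring the “How will you verify” column) and computes a measurement window for it (mirroring the “When measured” column). The `Outcome` class, the `WINDOWS` table, the helper names, and the sample outcomes are illustrations, not part of the ERME reporting system. Only the 3-to-6-month mid-term follow-up comes from the text; the at-delivery short-term window and the 12-to-18-month long-term window are assumptions to adjust to your own delivery schedule.

```python
import calendar
from dataclasses import dataclass
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


@dataclass
class Outcome:
    description: str  # proposed participant result
    term: str         # "short", "mid", or "long"
    instrument: str   # how the result will be verified ("How will you verify")


# Hypothetical measurement windows, in months after program delivery.
# "mid" follows the 3-to-6-month follow-up window described in the text;
# "short" (at delivery) and "long" (12 to 18 months) are assumptions.
WINDOWS = {"short": (0, 0), "mid": (3, 6), "long": (12, 18)}


def measurement_window(outcome: Outcome, delivered: date) -> tuple[date, date]:
    """Return the (earliest, latest) dates on which to measure an outcome ("When measured")."""
    start, end = WINDOWS[outcome.term]
    return add_months(delivered, start), add_months(delivered, end)


if __name__ == "__main__":
    # Hypothetical outcomes for illustration only.
    plan = [
        Outcome("Participants can name three crop insurance options", "short", "end-of-session survey"),
        Outcome("Participants revise their crop insurance coverage", "mid", "follow-up online survey"),
        Outcome("Participating operations report improved financial stability", "long", "interviews"),
    ]
    delivered = date(2017, 1, 15)
    for outcome in plan:
        start, end = measurement_window(outcome, delivered)
        print(f"{outcome.term:>5} | {outcome.instrument} | {start} to {end} | {outcome.description}")
```

Running the script prints one line per outcome with its instrument and date range. The same rows could just as easily live in an Excel spreadsheet; the design point is that every outcome carries its verification instrument and measurement dates from the start, rather than having tools chosen after delivery.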

Finally, revisit your proposed risk management outcomes and consider each of the following: Does the focus of your outcomes accurately reflect the risk management topics and issues you will be covering? Are the outcomes achievable and pragmatic? Do the outcomes provide a current roadmap for the risk management progress you want participants to achieve? A healthy review of your proposed outcomes and data collection techniques at four points (during the application process, after the program is funded but before delivery begins, while the program is being delivered, and at the end of the program) will help ensure an evaluation process that can successfully assess producer risk management results.

A later article will address evaluation specifics using the Logic Model as a framework for evaluating and verifying risk management results.

Ripple Effects Mapping (REM)

Another evaluation technique that lends itself well to impact reporting is Ripple Effects Mapping (REM). The next article will feature a project in Utah that used REM with a ranching family and uncovered unforeseen positive changes, including the family addressing and implementing risk management practices not covered in the program delivery. Look for an in-depth article on the mechanics of Ripple Effects Mapping in our next newsletter.

References & Citations:

Crane, L., Gantz, G., Isaacs, S., Jose, D., & Sharp, R. (2013). Introduction to risk management (2nd ed.). Extension Risk Management Education and Risk Management Agency. Retrieved from http://extensionrme.org/pubs/Intro-Risk-Mgmt.pdf

Gertler, P., Martinez, S., Premand, P., Rawlings, L., & Vermeersch, C. (2016). Impact evaluation in practice (2nd ed.). Washington, D.C.: World Bank.

Hoggarth, L. & Comfort, H. (2010). A practical guide to outcome evaluation. London: Jessica Kingsley Publishers.

Kalambokidis, L. (2013). Proceedings from Agricultural and Applied Economics Association (AAEA) Conference: Tell us about your extension program’s public value-level impacts. Washington, D.C.

Morell, J. (2005). Why are there unintended consequences of program action, and what are the implications for doing evaluation? American Journal of Evaluation, 26, 444-463.

Patton, M.Q. (2008). Utilization-focused evaluation. Los Angeles: Sage.

Saunders, R. (2015). Implementation monitoring and process evaluation. Los Angeles: Sage.

Warner, J. (2013). Proceedings from Agricultural and Applied Economics Association (AAEA) Conference: Designing outcome/results based programs for participant success & impact reporting. Washington, D.C.

Witkin, B., & Altschuld, J. (1994). Planning and conducting needs assessments: A practical guide. Los Angeles: Sage.

W.K. Kellogg Foundation. (n.d.). Evaluation handbook. Battle Creek, MI: Sanders, J.

More from Fall 2016 Newsletter

Introduction

Risk management education will play a significant role for America’s farm and ranch families.

Managing Financial Risks South Central Utah

This project addressed the financial and production risks of beginning and progressing farmers and ranchers in south central Utah.